About

A problem-solver at heart with a love for clean code and creative solutions. My journey in tech has been fueled by curiosity, coffee, and a relentless desire to tackle the next challenge. Whether it's building apps or debugging the toughest issues, I’m always up for the task. Let’s make something amazing together!

Data Analytics and Engineering Professional & Developer.

Analytics/Data Engineer with 5+ years of experience (including a Master's degree) in building scalable data models, optimizing ETL pipelines, and driving actionable insights. Skilled in SQL, Python, Snowflake, and AWS, with a focus on data quality and performance. Experienced in enabling data-driven decisions through collaboration and innovation.

- Birth Year: 1997

- Website: jainvaibhav62.github.io

- Phone: +1 (857) 529-8250

- City: Frisco, USA

- Degree: Master's in Data Analytics

- Email: jnvaibhav97@gmail.com

- New Job Opportunity: Available

I’m driven, adaptable, and constantly evolving—ready to bring innovative solutions to any team. Let’s transform challenges into success, and build the future of tech.

Skills

A finely-tuned arsenal of tech tricks and tools—polished through curiosity, coffee, and countless “just one more bug” moments.

Resume

All the credentials, experience, and carefully-placed buzzwords—wrapped in a neat package, minus the corporate jargon overdose.

Summary

Vaibhav Jain

User-focused and impact-driven Data Engineer with a passion for building scalable data systems and delivering clean, efficient solutions. Known for turning complex problems into streamlined pipelines with clarity, creativity, and code.

- Frisco, Texas

- +1 (857) 529-8250

- jnvaibhav97@gmail.com

Education

Master of Data Analytics & Engineering

2021 - 2023

Northeastern University, Boston, MA

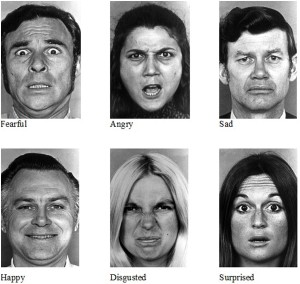

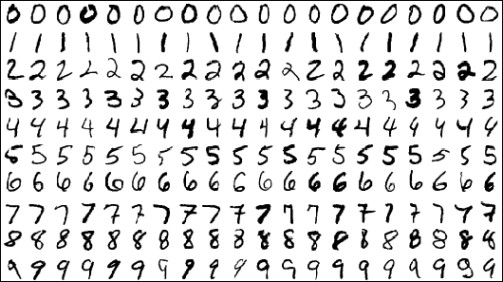

Relevant Courses: Big Data, Probability & Statistics, Machine Learning.

Bachelor of Computers & Engineering

2015 - 2019

Narsee Monjee Institute of Management Studies, India

Relevant Courses: Data Structures and Algorithms, Operating Systems, Compiler Design, Big Data.

Professional Experience

Data Engineer

June 2023 - Present

Toyota, Plano, TX

- Collaborated closely with Finance teams to develop SOX-compliant automated SQL queries, reducing audit validation time across multi-environment systems and driving process efficiencies.

- Developed end-to-end data pipelines using Python and SQL to ingest and transform data, applying Data Vault techniques in Snowflake to ensure data integrity and scalability across Finance-related processes.

- Contributed to the migration of on-premises data infrastructure to the cloud, ensuring minimal downtime and data integrity throughout the transition.

- Designed and implemented scalable data engineering frameworks, enabling teams to automate Data Vault load processes and streamline real-time data ingestion, impacting strategic financial insights for over 1,000 internal stakeholders.

- Leveraged AWS event services to build event-driven data workflows, automating real-time data ingestion from S3 to Finance reporting layers, enhancing timely data delivery for decision-making.

Analytics Engineer

May 2023 - June 2023

Sezzle, Remote

- Utilized AWS Redshift and SQL to assist in maintaining and optimizing existing data pipelines.

- Contributed to the development of dbt models and supported the design of Redash dashboards for visualizing key metrics.

Data Analyst

Jan 2022 - July 2022

TakeOff Technologies, Waltham, MA, USA

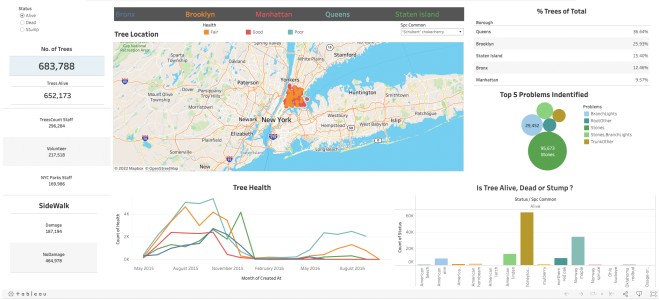

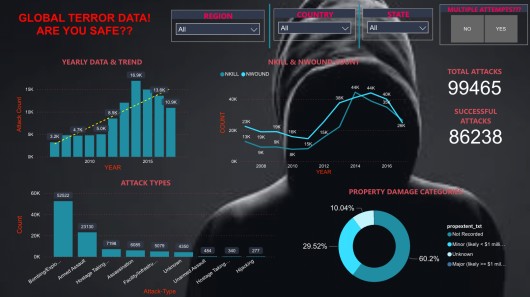

- Built out the data and reporting infrastructure from the ground up using Looker and SQL to provide real-time insights into the product, marketing funnels, and business KPIs.

- Built operational reporting in Looker to find areas of improvement for contractors, resulting in quarterly incremental revenue.

- Worked with stakeholders to understand business needs and translate those needs into actionable reports in Looker and Snowflake, saving 18 hours of manual work each week.

- Presented ad hoc research and findings from disparate sources to upper-level management.

- Used business intelligence tooling (Looker) to create 15+ dashboards and 25+ ad hoc reports to address business problems and streamline processes.

Associate Software Engineer

July 2019 - Dec 2020

Accenture Inc., India

- Co-developed the SQL Server database system to maximize performance benefits for clients.

- Developed a custom ETL solution with batch processing and real-time data ingestion pipelines to move data in and out of Hadoop using Python and shell scripts.

- Wrote complex SQL queries, stored procedures, triggers, views, cursors, joins, constraints, DDL, DML, and user-defined functions to implement business logic.

- Worked extensively on data migration, data cleansing, data profiling, and ETL processes for data warehouses.

- Designed and published visually rich and intuitive Tableau dashboards and Crystal Reports for executive decision-making.

- Worked extensively on data validation between Hive source and target tables using automated Python scripts.

Data Engineer Intern

May 2018 - July 2018

CatchSavvy Solutions, India

- Strategized ETL processes and maintained data pipelines across millions of rows of data, reducing manual workload by 43%.

- Maintained large databases and used various professional statistical techniques to collect, analyze, and interpret financial data from customers and partners; also responsible for carrying out A/B testing.

- Contributed to the design and development of new quantitative models and a data warehouse to help the company stabilize and maximize efficiency.

Technology Analyst Intern

May 2017 - July 2017

Nextsavy Technologies LLP, India

- Identified and derived the key features from unstructured data by converting from HDFS to RDBMS using MySQL.

- Maintained large databases and used various professional statistical techniques to collect, analyze, and interpret data from customers and partners.

Testimonials

I've had the privilege of working alongside some incredible people. Here's what they had to say about our time together.

Contact

Let’s make it official: here’s how to reach the brain behind the brilliance.

Location:

Frisco, Texas

Email:

jnvaibhav97@gmail.com